Differing benefits of artificial intelligence-based computer-aided diagnosis for breast US according to workflow and experience level

Abstract

Purpose

This study evaluated how artificial intelligence-based computer-assisted diagnosis (AI-CAD) for breast ultrasonography (US) influences diagnostic performance and agreement between radiologists with varying experience levels in different workflows.

Methods

Images of 492 breast lesions (200 malignant and 292 benign masses) in 472 women taken from April 2017 to June 2018 were included. Six radiologists (three inexperienced [<1 year of experience] and three experienced [10-15 years of experience]) individually reviewed US images with and without the aid of AI-CAD, first sequentially and then simultaneously. Diagnostic performance and interobserver agreement were calculated and compared between radiologists and AI-CAD.

Results

After implementing AI-CAD, the specificity, positive predictive value (PPV), and accuracy significantly improved, regardless of experience and workflow (all P<0.001). The overall area under the receiver operating characteristic curve significantly increased in simultaneous reading, but only for inexperienced radiologists. The agreement for Breast Imaging Reporting and Data System (BI-RADS) descriptors generally increased when AI-CAD was used (κ=0.29-0.63 to 0.35-0.73). Inexperienced radiologists tended to concede to AI-CAD results more easily than experienced radiologists, especially in simultaneous reading (P<0.001). The conversion rates for final assessment changes from BI-RADS 2 or 3 to BI-RADS 4a or higher, or vice versa, were also significantly higher in simultaneous reading than in sequential reading (overall, 15.8% and 6.2%, respectively; P<0.001) for both inexperienced and experienced radiologists.

Conclusion

Using AI-CAD to interpret breast US improved the specificity, PPV, and accuracy of radiologists regardless of experience level. AI-CAD may work better in simultaneous reading to improve diagnostic performance and agreement between radiologists, especially for inexperienced radiologists.

Introduction

Ultrasonography (US) is commonly used to evaluate breast abnormalities, especially those that are detected on mammography. Although US has many advantages over mammography, such as being easily available, radiation-free, and cost-effective, it has relatively lower specificity and positive predictive value (PPV) than mammography, which can lead to false-positive recalls and unnecessary biopsies [1]. US examinations and interpretations of US images also rely on the experience level of the examiner and are well-known to be operator-dependent [2]. To overcome observer variability and improve the overall diagnostic performance of breast US, artificial intelligence-based computer-assisted diagnosis (AI-CAD) programs have recently been developed and implemented in clinical practice [3-5].

Several previous studies have demonstrated that the integration of AI-CAD into US improves radiologists’ diagnostic performance [6-8], with most US examinations being performed by dedicated breast radiologists from single institutions. However, practitioners with different training or practice backgrounds and different levels of experience perform and interpret breast US in everyday clinical practice [6,8,9]. To the best of the authors’ knowledge, no studies have focused on the analytic results of radiologists from multiple institutions using AI-CAD for breast US. It has also been suggested that diagnostic performance may differ according to the step of US interpretation where AI-CAD is introduced [7], but currently many users refer to AI-CAD arbitrarily, and the stage at which AI-CAD is most effective has not yet been established. Typically, radiologists may refer to AI-CAD after reaching a conclusion about a US-detected lesion, which is termed sequential reading, or they may refer to AI-CAD before reaching a conclusion, which is termed simultaneous reading [7]. Considering that radiologists reach a conclusion by combining various US features, it was hypothesized that the timing of providing AI-CAD results during US image interpretation may have an impact on the final assessment.

Therefore, the purpose of this study was to evaluate and compare diagnostic performance and agreements among radiologists with various levels of training and experience when AI-CAD was used to interpret breast US in different workflows.

Materials and Methods

Compliance with Ethical Standards

This retrospective study was approved by the institutional review board (IRB) of Severance Hospital, Seoul, Korea (1-2019-0027), with a waiver for informed consent.

Data Collection

From April 2017 to June 2018, US images of 639 breast masses in 611 consecutive women were obtained using a dedicated US unit, in which AI-CAD analysis was possible (S-Detect for Breast, Samsung Medison, Co., Ltd., Seoul, Korea). The US images were then reviewed to see if they were of adequate image quality for CAD analysis, and a total of 492 breast lesions (292 benign and 200 malignant masses) in 472 women were finally included for review according to the following indications: (1) masses that were pathologically confirmed with US-guided biopsy or surgery or (2) masses that had been followed for more than 2 years after showing benign features on US (Table 1). The proportion of benign and malignant masses used in preceding research to evaluate the performance of AI-CAD was used to select the 492 breast masses in the present study [10]. The mean age of the 472 women was 49.4±10.1 years (range, 25 to 90 years). The mean size of the 492 breast masses was 14.2±7.5 mm (range, 4 to 48 mm). Of the 492 breast masses, 409 breast lesions (83.1%) were pathologically diagnosed with US-guided core-needle biopsy (n=155), vacuum-assisted excision (n=12), and/or surgery (n=242). Eighty-three lesions (16.9%) were included based on typically benign US findings that were stable for more than 2 years.

US images were obtained using a 3-12A linear transducer (RS80A, Samsung Medison, Co., Ltd.). Two staff radiologists (J.H.Y. and E-K. K., 10 and 22 years of experience in breast imaging, respectively) acquired the images. During real-time imaging, representative images of breast masses were recorded and used for the AI-CAD analysis. Images were converted into Digital Imaging and Communications in Medicine files and stored on separate hard drives for individual image analysis. Basic information on the AI-CAD software is provided in Supplementary Data 1.

Experience Level of the Radiologists and Workflow with AI-CAD

For the reader study, three inexperienced radiologists (two radiology residents and one fellow: J.Y., second-year resident; M.R., third-year resident; S.E.L., fellow with less than 1 year of experience in breast imaging) and three experienced breast-dedicated radiologists from different institutions (J.H.Y., J.E.L., and J-Y.H. with 15, 13, and 10 years of experience, respectively) participated in this study. AI-CAD software was set up on each radiologist's personal computer, and each radiologist was initially given 10 separate test images that were not included in the image set for review in order to familiarize themselves with AI-CAD. After the image was displayed with the AI-CAD program, a target point was set at the center of the breast mass by each radiologist, and the program automatically produced a region of interest (ROI) based on the target point. If the ROI was considered inaccurate for analysis by the radiologist, it was adjusted manually. US characteristics according to the Breast Imaging Reporting and Data System (BI-RADS) lexicon and the final assessments of the masses were automatically analyzed and visualized by the AI-CAD program (Fig. 1). Based on the above data, the AI-CAD program assessed lesions as possibly benign or possibly malignant.

Fig. 1. Representative image showing how AI-CAD (S-Detect for Breast) operates.

After the program displays an image for analysis, a target point (green dot on A) is set in the mass center. By clicking the "Calculate" button on the left column of the screen display, a region of interest is automatically drawn along the mass border, with US features (right column) and the final assessment (top blue box) being displayed accordingly (B). AI-CAD, artificial intelligence-based computer-assisted diagnosis.

Each radiologist individually evaluated the US images of all 492 breast masses with two separate workflows, sequential reading and simultaneous reading, which took place 4 weeks apart for washout. During sequential reading, each radiologist initially evaluated each of the 492 breast masses according to the BI-RADS lexicon and masses were assigned final assessments from BI-RADS 2 to 5. The radiologists then executed AI-CAD to obtain stand-alone results, which were separately recorded for data analysis. After referring to the analytic results of AI-CAD, each radiologist was asked to reassess the BI-RADS lexicon and final assessment categories, which were also individually recorded for analysis.

During simultaneous reading, radiologists were presented with all 492 images in random order, and the AI-CAD results from the previous sequential reading were given to the radiologists before image review. As with sequential reading, each radiologist reviewed and recorded data according to the BI-RADS lexicon and final assessments (Fig. 2). Radiologists were blinded to the final pathologic diagnoses of the breast masses and did not have access to clinical patient information or images from mammography or prior US examinations.

Statistical Analysis

The final assessments based on US BI-RADS were divided into two groups for statistical analysis: negative (BI-RADS 2 and 3) and positive (BI-RADS 4a to 5). The diagnostic performance of the radiologists without the assistance of AI-CAD (unaided [U]), of stand-alone AI-CAD (A), and of the radiologists with AI-CAD during sequential reading (R1) and simultaneous reading (R2) was quantified in terms of sensitivity, specificity, PPV, negative predictive value (NPV), and accuracy. Logistic regression with the generalized estimating equation (GEE) method was used to compare diagnostic performance. The area under the receiver operating characteristic curve (AUC) was calculated and compared using the multi-reader multi-case receiver operating characteristic method developed by Obuchowski and Rockette [11]. The conversion rate was defined as the number of changes in final assessments between unaided (U) and aided (R1 and R2, respectively) readings divided by the total number of assessments, calculated for all six readers and for each subgroup of three inexperienced and three experienced readers.
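As an illustration of the dichotomization and the five performance measures described above, the following minimal Python sketch may be helpful. It is not the SAS code used in this study; the function names and the toy data are hypothetical, and the BI-RADS 4 subcategories (4a, 4b, 4c) are assumed to be the standard ones.

```python
# Illustrative sketch only: toy data and hypothetical names, not the study's analysis code.

POSITIVE = {"4a", "4b", "4c", "5"}  # BI-RADS 4a to 5 are treated as positive
NEGATIVE = {"2", "3"}               # BI-RADS 2 and 3 are treated as negative


def dichotomize(category):
    """Map a BI-RADS final assessment to True (positive) or False (negative)."""
    if category in POSITIVE:
        return True
    if category in NEGATIVE:
        return False
    raise ValueError(f"unexpected BI-RADS category: {category!r}")


def diagnostic_metrics(assessments, malignant):
    """Compute sensitivity, specificity, PPV, NPV, and accuracy.

    assessments: BI-RADS final assessments, one per lesion
    malignant:   reference standard, True for malignant and False for benign
    Assumes each of the four 2x2 margins is non-empty (no zero denominators).
    """
    calls = [dichotomize(c) for c in assessments]
    pairs = list(zip(calls, malignant))
    tp = sum(1 for c, m in pairs if c and m)
    fp = sum(1 for c, m in pairs if c and not m)
    fn = sum(1 for c, m in pairs if not c and m)
    tn = sum(1 for c, m in pairs if not c and not m)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / len(pairs),
    }


# Toy example with eight hypothetical lesions.
toy_assessments = ["2", "3", "4a", "4b", "5", "3", "4a", "2"]
toy_malignant = [False, False, False, True, True, True, False, False]
toy_metrics = diagnostic_metrics(toy_assessments, toy_malignant)
print(toy_metrics)
```

This sketch only yields point estimates for a single reader; the between-condition comparisons in this study additionally accounted for repeated readings of the same lesions through the GEE method.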

The Fleiss kappa (κ) was calculated to analyze the interobserver agreement between radiologists for US descriptors and final assessments, and the Cohen κ was calculated to analyze the agreement between radiologists and AI-CAD. The κ values were interpreted as follows: 0.00-0.20, slight agreement; 0.21-0.40, fair agreement; 0.41-0.60, moderate agreement; 0.61-0.80, substantial agreement; and 0.81-1.00, excellent agreement [12]. Logistic regression with the GEE method was used to compare how final assessments changed with the aid of AI-CAD according to each workflow and the radiologists’ experience level.
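For readers unfamiliar with the Fleiss κ, a minimal Python sketch of its calculation and of the interpretation bands quoted above is given below. The function names and the toy ratings are hypothetical and serve only as an illustration; this is not the code used for the analysis, and the handling of negative κ values is an assumption because the quoted bands start at 0.00.

```python
# Illustrative sketch only: hypothetical names and toy ratings, not the study's analysis code.
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss kappa for a list of lesions, each rated once by every reader.

    ratings: list of lists; ratings[i] holds one category label per reader for lesion i.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(row) != n_raters for row in ratings):
        raise ValueError("every lesion needs the same number (>= 2) of ratings")

    counts = [Counter(row) for row in ratings]
    categories = sorted({label for row in ratings for label in row})

    # Observed agreement: mean over lesions of the proportion of agreeing reader pairs.
    per_item = [
        (sum(c[k] ** 2 for k in categories) - n_raters) / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_observed = sum(per_item) / n_items

    # Chance agreement from the overall proportion of each category.
    proportions = [sum(c[k] for c in counts) / (n_items * n_raters) for k in categories]
    p_expected = sum(p * p for p in proportions)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when only one category is ever used")
    return (p_observed - p_expected) / (1.0 - p_expected)


def interpret_kappa(kappa):
    """Map kappa to the agreement bands quoted in the text."""
    if kappa < 0:
        return "below the quoted bands"  # assumption: negative values are not covered
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "excellent"


# Toy example: four hypothetical lesions, three readers, shape descriptor.
toy_shape = [
    ["oval", "oval", "oval"],
    ["oval", "oval", "irregular"],
    ["irregular", "irregular", "irregular"],
    ["round", "oval", "irregular"],
]
toy_kappa = fleiss_kappa(toy_shape)
print(round(toy_kappa, 3), interpret_kappa(toy_kappa))
```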

Statistical analyses were performed using SAS version 9.4 (SAS Institute Inc., Cary, NC, USA). All tests were two-sided, and P-values of less than 0.05 were considered to indicate statistical significance.

Results

Diagnostic Performance of Radiologists after Implementation of AI-CAD

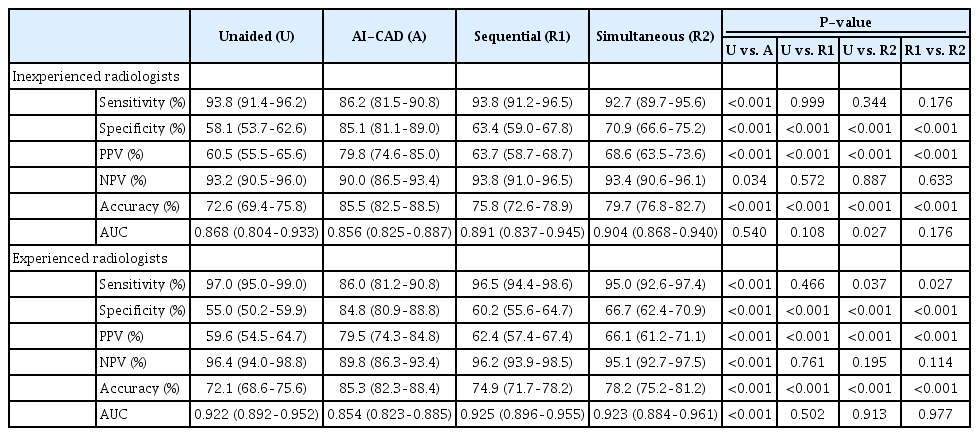

Table 2 summarizes the overall diagnostic performance of the six radiologists and AI-CAD. The AI-CAD program itself showed higher specificity (84.9% vs. 56.6%, P<0.001), PPV (79.7% vs. 60.1%, P<0.001), and accuracy (85.4% vs. 72.4%, P<0.001), with lower sensitivity (86.1% vs. 95.4%, P<0.001) and NPV (89.9% vs. 94.7%, P=0.002) than the radiologists. The AUC was lower with AI-CAD than for the unaided radiologists, but without statistical significance (0.855 vs. 0.895, P=0.050). After applying AI-CAD, the specificity, PPV, and accuracy of the radiologists significantly improved in both sequential reading and simultaneous reading (all P<0.001). When simultaneous reading was compared to sequential reading, specificity, PPV, and accuracy were significantly higher in simultaneous reading (all P<0.001) for both the experienced and inexperienced radiologists. The AUC did not significantly improve after AI-CAD was implemented in either the sequential or the simultaneous reading workflow, with changes from 0.895 to 0.908 and 0.913, respectively (P=0.093 and P=0.099, respectively).

Diagnostic Performance of Radiologists According to Experience Level after Implementation of AI-CAD

When the radiologists were divided according to experience level, specificity, PPV, and accuracy significantly improved in both the experienced and inexperienced groups for both sequential and simultaneous reading (all P<0.05) (Table 3). For the inexperienced radiologists, the AUC increased from 0.868 to 0.891 in sequential reading, but without statistical significance (P=0.108), while it significantly improved from 0.868 to 0.904 in simultaneous reading (P=0.027) (Fig. 3). For the experienced radiologists, the AUC did not show a significant improvement in either sequential or simultaneous reading (P=0.502 and P=0.913, respectively).

Fig. 3. Representative cases of inexperienced readers with each result by sequential and simultaneous reading.

A. US image of a 56-year-old woman diagnosed with a 16-mm invasive ductal carcinoma is shown. The inexperienced reader initially diagnosed the lesion as BI-RADS 3, and did not change the result after referring to the AI-CAD result of "possibly malignant" in sequential reading. However, after the washout period, the reader diagnosed the lesion as BI-RADS 4a based on simultaneous reading with AI-CAD. B. US image of a 47-year-old woman diagnosed with a 12-mm fibroadenoma is shown. An inexperienced reader initially diagnosed the lesion as BI-RADS 4a, and did not change the result after referring to the AI-CAD result of "possibly benign" in sequential reading. However, after the washout period, the reader diagnosed the lesion as BI-RADS 3 based on simultaneous reading with AI-CAD. US, ultrasonography; BI-RADS, Breast Imaging Reporting and Data System; AI-CAD, artificial intelligence-based computer-assisted diagnosis.

As for changes in the final assessments after AI-CAD was integrated into breast US, significantly higher proportions of changes were seen in simultaneous reading than in sequential reading (overall, 40.8% and 16.8%, respectively; P<0.001). Similar trends were seen for both the experienced and inexperienced groups (all P<0.001) (Supplementary Tables 1, 2). Moreover, the proportions of change were significantly higher in the inexperienced group (experienced 35.4% vs. inexperienced 46.2% in simultaneous reading, P<0.001). The conversion rates for breast masses that were initially BI-RADS 2 or 3 to BI-RADS 4a or higher, or vice versa, were also significantly higher in simultaneous reading than in sequential reading (overall, 15.8% and 6.2%, respectively; P<0.001). Similar trends were seen for both the experienced and inexperienced groups (all P<0.001) (Supplementary Tables 1, 2).
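The two quantities reported in this paragraph, the proportion of any change in final assessment and the conversion rate across the BI-RADS 3/4a threshold, can be sketched as follows. This is a minimal illustration with hypothetical function names and toy readings, not the code used to produce the reported percentages.

```python
# Illustrative sketch only: hypothetical names and toy readings, not the study's analysis code.

POSITIVE = {"4a", "4b", "4c", "5"}  # BI-RADS 4a to 5; BI-RADS 2 and 3 are negative


def change_rates(unaided, aided):
    """Return (proportion of any change, conversion rate across the 3/4a threshold).

    unaided: final assessments without AI-CAD, one per reading
    aided:   final assessments for the same readings with AI-CAD
    """
    if len(unaided) != len(aided) or not unaided:
        raise ValueError("unaided and aided must be non-empty and of equal length")
    pairs = list(zip(unaided, aided))
    # Any change of category, including changes within BI-RADS 4 subcategories.
    any_change = sum(1 for u, a in pairs if u != a)
    # Changes that move a lesion between the negative and positive groups.
    crossing = sum(1 for u, a in pairs if (u in POSITIVE) != (a in POSITIVE))
    return any_change / len(pairs), crossing / len(pairs)


# Toy example: six hypothetical readings.
toy_unaided = ["3", "4a", "4a", "2", "4b", "3"]
toy_aided = ["3", "3", "4b", "4a", "4b", "2"]
toy_any, toy_conversion = change_rates(toy_unaided, toy_aided)
print(toy_any, toy_conversion)
```

In the toy data, a change from BI-RADS 4a to 4b counts as a change in final assessment but not as a conversion, which is why the conversion rate is always less than or equal to the overall proportion of change.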

Interobserver Agreement for Descriptors and Assessments According to BI-RADS

Table 4 summarizes the agreement of US descriptors and final assessment categories between radiologists and AI-CAD according to the different workflows. For most descriptors (echogenicity, shape, margin, orientation, and posterior features), agreement between the six radiologists increased regardless of experience level in both sequential and simultaneous reading. Inexperienced radiologists showed better agreement for all BI-RADS descriptors (echogenicity, shape, margin, orientation, and posterior features) in simultaneous reading than in sequential reading (all P<0.001). The agreement for the final assessments significantly increased for both sequential and simultaneous reading in the inexperienced group (P=0.010 and P<0.001, respectively), while significantly lower agreement was seen for both workflows in the experienced group (P=0.042 and 0.023, respectively).

In an analysis of agreement between radiologists and AI-CAD, the agreement for descriptors and final assessments improved in both workflows.

Discussion

The results of the present study show that with the aid of AI-CAD, specificity, PPV, and accuracy significantly improved regardless of radiologists’ experience level. These results are consistent with previous studies that also found significantly improved specificity and PPV with the same AI-CAD program [6,8,13-15]. However, the AUC did not significantly improve after AI-CAD was implemented, except in simultaneous reading with inexperienced radiologists. Some earlier studies found significantly improved AUC when AI-CAD was used for breast US, and this was particularly observed when AI-CAD was used to assist inexperienced radiologists [10,13,14], who initially showed significantly lower diagnostic performance than experienced radiologists without AI-CAD. However, the overall AUCs for both the inexperienced and experienced groups in the present study were higher than reported in previous studies (0.868 and 0.922, respectively), which might limit the range of potential improvement after AI-CAD application. This difference from previous studies may be due to the type and number of images selected for review in this study, as previous studies used video clips for image analysis or pre-selected the CAD interpretation results [13,14], whereas the present study used representative still-images of breast masses with the AI-CAD analysis being performed individually by radiologists.

Currently, there are no guidelines on how AI-CAD should be implemented in breast US interpretation. Therefore, this study compared two different workflows: sequential and simultaneous reading. Sequential reading simulates a workflow where radiologists perform and interpret US examinations, while simultaneous reading simulates a workflow where sonographers perform US examinations first, and interpreting radiologists review the scanned images using the results of AI-CAD analysis. The results of this study suggest that AI-CAD may work better where radiologists interpret scans performed by sonographers, especially for inexperienced radiologists, a finding that clinicians should consider when implementing AI-CAD for breast US in practice. In addition to the clinical workflow, the two workflows can be considered in terms of technical availability: sequential reading simulates using AI-CAD embedded in a picture archiving and communication system (PACS), while simultaneous reading simulates using AI-CAD embedded in US equipment in real-time examinations. How to effectively implement AI-CAD in this workflow is as complex as the heterogeneity of the workflow itself, and along with these results, the authors anticipate that future studies will provide guidelines on how to effectively integrate AI-CAD for breast US according to different workflows.

The results of the present study showed that specificity, PPV, and accuracy were higher in simultaneous reading than in sequential reading, regardless of the radiologists’ experience level. In addition, the AUC of the inexperienced radiologists significantly increased in simultaneous reading (0.868 to 0.904, P=0.027). A previous study that compared the two different workflows in breast US using a different AI-CAD platform found results similar to these, in that AI-CAD proved to be more beneficial in simultaneous reading for both experienced and inexperienced radiologists [7]. The differences in performance according to sequential and simultaneous reading may be due to (1) radiologists’ less flexible acceptance of contrary results by AI-CAD after they have a certain diagnosis in mind during sequential reading, and (2) the "bandwagon effect," which refers to the tendency to align one’s opinion with AI-CAD [16]. These factors may explain the significant improvement of the AUC in simultaneous reading. These findings indicate that the time point at which the AI-CAD results for breast US are made available can affect radiologists’ diagnostic performance, and this should be considered for the real-world application of AI-CAD.

In addition to diagnostic performance, significantly higher proportions of change were seen for BI-RADS categories in simultaneous reading than in sequential reading, particularly for radiologists in the inexperienced group. Changes in the final assessment from BI-RADS 2 or 3 to BI-RADS 4a or higher, or vice versa, are important, as they can lead to critical decisions on whether to perform biopsy. The conversion rates were also significantly higher in simultaneous reading than in sequential reading for both experienced and inexperienced radiologists, suggesting that the type of workflow in which AI-CAD is implemented can also influence the clinical management of patients, as was seen in a previous study [10].

Prior studies have reported considerable variability among radiologists in the evaluation of the US BI-RADS lexicon and final assessments [17]. In this study, six radiologists with various levels of experience in breast imaging showed fair to substantial agreement for descriptors and final assessments, which were in the value ranges suggested by previous studies [17]. The overall agreement for all BI-RADS lexicon items and final assessments improved with AI-CAD. Moreover, simultaneous reading with AI-CAD showed higher agreement between radiologists for shape, margin, orientation, posterior features, and the final assessments. However, when radiologists were subgrouped according to experience level, the agreement among experienced radiologists did not significantly increase for most BI-RADS lexicon items and even decreased for the final assessments. The agreement in this study was generally lower than in previous studies in which AI-CAD improved the agreement between radiologists for final assessments [8,10,13]; this may be attributable to the subcategorization of BI-RADS 4 and the inclusion of radiologists from different training backgrounds and institutions.

This study has several limitations. The most notable one is its retrospective data collection from a single institution. However, in order to reflect real-world practice, breast images were selected from a consecutive population according to the benign-malignant ratio and the proportion of BI-RADS final assessments found for real-time US in preceding research using AI-CAD [10]. Second, pre-selected static images of breast masses were analyzed. An analysis of video clips that includes a series of images of the entire breast lesion may result in higher interobserver variability arising from the selection of the representative image. This may affect the diagnostic performance and interobserver agreement in a multi-reader study. Third, the same set of images was used for sequential and simultaneous reading. Although there was a 4-week washout period between the two reading processes, some images may have remained in the radiologists’ memory, and this might have affected their assessments. Last, using the cutoff of BI-RADS 3/4a for a binary classification may have influenced the calculated diagnostic performance, and using different cutoffs may have led to different results.

In conclusion, using AI-CAD to interpret breast US improves the specificity, PPV, and accuracy of radiologists regardless of experience level. More improvements may be seen when AI-CAD is implemented in simultaneous reading through better diagnostic performance and agreement between radiologists, especially for inexperienced radiologists.

Notes

Author Contributions

Conceptualization: Yoon JH. Data acquisition: Youk JH, Lee JE, Hwang JY, Rho M, Yoon J, Yoon JH. Data analysis or interpretation: Lee SE, Han K, Yoon JH. Drafting of the manuscript: Lee SE. Critical revision of the manuscript: Lee SE, Han K, Youk JH, Lee JE, Hwang JY, Rho M, Yoon J, Yoon JH. Approval of the final version of the manuscript: all authors.

Conflict of Interest

No potential conflict of interest relevant to this article was reported.

Supplementary Material

Supplementary Data 1.

Basic information of S-Detect for breast (https://doi.org/10.14366/usg.22014).

Supplementary Table 1.

Distribution of changes in final assessments (https://doi.org/10.14366/usg.22014).

Supplementary Table 2.

The range of changes in final assessments, calculated as the percentage of number of cases with changed final assessments per total number of cases reviewed by the radiologists (https://doi.org/10.14366/usg.22014).

References

Notes

Key point

Artificial intelligence-based computer-assisted diagnosis (AI-CAD) can improve the specificity, positive predictive value, and accuracy of radiologists in diagnosing breast cancer on ultrasonography. Inexperienced radiologists may have more benefits in terms of improving the area under the curve. AI-CAD may work better in simultaneous reading to improve diagnostic performance and agreement between radiologists than in sequential reading.